How to extract data from a website with login to Excel

Excel is a widely accessible tool for data collection and analysis, offering features such as sorting, filtering, and chart creation. When working with well-structured datasets, it also supports more advanced techniques like pivot tables and regression analysis.

That said, manually gathering data—through repetitive typing, searching, copying, and pasting—is both time-consuming and error-prone. To overcome this, we will examine different approaches to efficiently extract data from websites into Excel, including strategies for handling sites that require authentication.

From Website to Excel: Working Tips & Solutions for 2026

Looking for a quick solution to pull data from websites—even login-protected ones—straight into Excel?

Here’s your cheat sheet of tips, shortcuts, and solutions for extracting data from websites with a login into Excel.

| Problem / Cause | How to Fix It |
| --- | --- |
| Need to scrape large amounts of data but have no coding skills | Use no-code scraping tools (ParseHub, WebHarvy, Import.io): point-and-click setup, auto-detection, and direct export to Excel. |
| Website requires login authentication (username, password, sessions) | Use scraping tools with login support, reuse session cookies, or outsource to professional scraping services. If an API is available, prefer API access. |
| Data appears in simple static HTML tables | Use Excel Web Queries: Data → Get External Data → From Web → select the table → Import. |
| Need custom, automated workflows inside Excel | Write Excel VBA macros that send HTTP requests, parse HTML, and loop through pages. Best for programmers. |
| Website uses JavaScript / dynamic content | Use headless browser automation (Selenium, Puppeteer), which drives a real browser, then export the results to Excel. |
| Website protected with CAPTCHAs | Solve them manually during scraping, or use CAPTCHA-solving APIs (e.g., 2Captcha). For recurring scraping, prefer official APIs. |
| Site uses multi-factor authentication (MFA) | Enter MFA codes manually for occasional scraping. For repeated tasks, reuse session cookies or check whether an official API is available. |
| Extracted data is messy / inconsistent (duplicates, wrong formats, extra characters) | Clean it in Excel (TRIM, CLEAN, Remove Duplicates, Power Query) or automate cleaning with Python (pandas). |
| Large-scale scraping runs into IP blocks or anti-bot detection | Use proxies, IP rotation, or anti-detect browsers such as Multilogin, or outsource to enterprise-level scraping services. |
| Task is too time-consuming to manage in-house | Hire professional scraping services that deliver ready-to-use Excel datasets (price monitoring, competitor analysis, lead generation). |

Method 1: No-code crawler to extract data from a website with login to Excel

Web scraping is one of the most versatile ways to collect different types of data from web pages and transfer them into Excel files. While many users find web scraping difficult due to limited coding knowledge, there are user-friendly tools that make it possible to extract data without writing any code.

Popular no-code web scraping tools include:

- ParseHub – An intuitive tool that supports complex data extraction with point-and-click setup.

- WebHarvy – A visual scraper that automatically identifies patterns in web pages for easier data collection.

- Import.io – A robust platform that allows users to convert website content into structured datasets with minimal effort.

These tools often provide features like AI-powered auto-detection, allowing data to be identified and extracted automatically. In most cases, your role is simply to review the results and make small adjustments. More advanced options may also include functionalities such as API access, IP rotation, cloud-based services, and scheduled scraping, which can help gather more comprehensive datasets.

Although video tutorials can be useful, you can also follow simple step-by-step instructions to scrape website data into Excel without coding.

For a broader overview of available scraping tools and how they can simplify data extraction, see our dedicated blog article.

Online data scraping templates

Pre-designed data scraping templates make it easy to collect information from popular platforms such as Amazon, eBay, LinkedIn, and Google Maps. With just a few clicks, these templates let you extract structured data directly from webpages. Many services also offer online scraping templates that run right in your browser, so you don’t need to install any software on your device.

3 Steps to Scrape Data from a Website to Excel

Step 1: Enter the target website URL to begin auto-detection

Start by pasting the URL of the website you want to scrape into a no-code web scraping tool such as ParseHub, WebHarvy, or Import.io. Many of these platforms include auto-detection features that can recognize data fields on a webpage and generate an initial scraping workflow for you. Tools like Multilogin can also be used alongside scraping solutions to manage browser fingerprints and reduce the risk of detection by anti-bot systems.

Step 2: Customize the data fields you want to extract

Once the auto-detection process is complete, you can review the suggested workflow and adjust the data fields according to your needs. Most scraping tools include simple point-and-click interfaces, making it easy to refine the extraction process without coding. Some platforms also provide built-in tips or guides to help with customization.

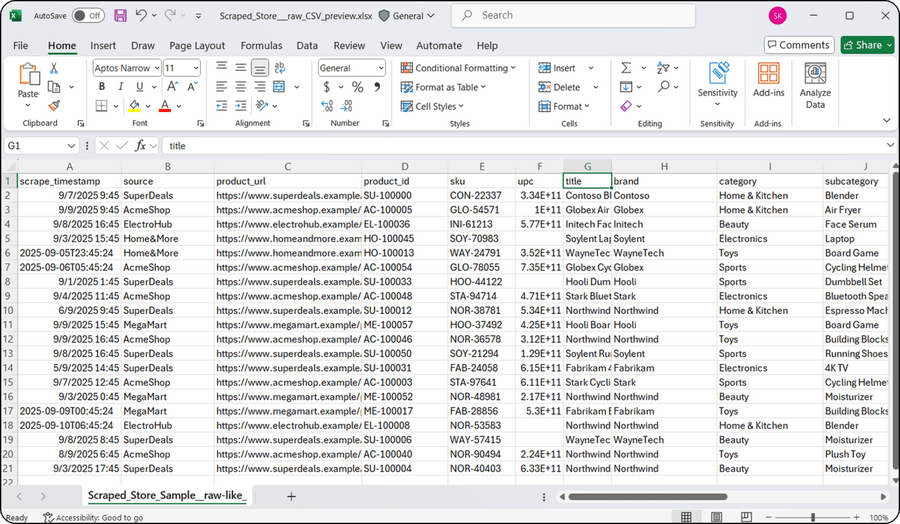

Step 3: Export scraped website data to Excel

After verifying the data fields, run the scraping task. The collected data can then be downloaded directly in Excel or CSV formats, or exported to a connected database or cloud storage service, depending on the tool you use.

Outsourcing web scraping projects

If handling scraping tasks in-house is too time-consuming, you can outsource the process to a specialized data extraction team. Professional services can help you navigate challenges like anti-scraping measures, CAPTCHAs, and IP blocking. The result is a clean, structured dataset delivered in Excel or another format tailored to your needs.

Businesses across industries often rely on these services for price monitoring, competitor analysis, lead generation, and market research, enabling them to focus on core activities while ensuring high-quality data collection.

To better understand the fundamentals of web scraping (how it works, why it matters, and when to use it), see our comprehensive overview.

Method 2: Use Excel web queries to extract data from a website with login to Excel

Beyond manual copying and pasting, Excel Web Queries offer a way to directly import tables from web pages into your spreadsheet. This feature can automatically identify tables embedded within a web page’s HTML structure.

Web Queries can also be useful when establishing or maintaining a standard ODBC (Open Database Connectivity) connection proves difficult. You can import a table directly from any website that presents it as a static HTML table.

Overall, this method is effective for extracting simple, static tables without requiring additional software.
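Web Queries handle table detection for you. To see roughly what that detection involves, or to get the same result outside Excel, here is a minimal Python sketch using only the standard library: it collects the text of every `<table>` row on a page and saves one table as a CSV file that Excel can open. It assumes reasonably well-formed HTML without nested tables, and the names `TableExtractor`, `extract_tables`, and `save_csv` are illustrative, not part of any library.

```python
import csv
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect each <table> as a list of rows, each row a list of cell texts."""

    def __init__(self):
        super().__init__()
        self.tables = []   # one list of rows per <table>
        self._row = None   # cells of the row being read
        self._cell = None  # text fragments of the cell being read

    def _finish_cell(self):
        if self._cell is not None and self._row is not None:
            # collapse runs of whitespace inside the cell
            self._row.append(" ".join("".join(self._cell).split()))
        self._cell = None

    def _finish_row(self):
        self._finish_cell()
        if self._row:  # skip rows with no cells
            self.tables[-1].append(self._row)
        self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._finish_row()   # tolerate a missing </tr>
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._finish_cell()  # tolerate a missing </td>
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._finish_cell()
        elif tag in ("tr", "table"):
            self._finish_row()

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def extract_tables(html):
    """Return every table in the HTML string as a list of rows."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.tables


def save_csv(rows, path):
    """Write rows to CSV; utf-8-sig adds a BOM so Excel detects UTF-8."""
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        csv.writer(f).writerows(rows)


if __name__ == "__main__":
    sample = ("<table><tr><th>Item</th><th>Price</th></tr>"
              "<tr><td>Pen</td><td> 1.50 </td></tr></table>")
    print(extract_tables(sample))  # [[['Item', 'Price'], ['Pen', '1.50']]]
```

In practice the HTML could come from `urllib.request.urlopen(url).read().decode()`, and `save_csv(extract_tables(html)[0], "table1.csv")` would write the first table. Like a Web Query, this only sees tables present in the HTML the server sends, not ones built later by JavaScript.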

6 Steps to extract website data using Excel web queries

Step 1:

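Go to Data > Get External Data > From Web in Excel. In Excel 2016 and later, this legacy dialog is hidden by default: enable it under File > Options > Data > Show legacy data import wizards, then open it from Data > Get Data > Legacy Wizards > From Web (Legacy). The newer Data > From Web button opens Power Query instead, which also lists the tables it finds on a page.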

Step 2:

A browser window titled New Web Query will open.

Step 3:

Enter the web address in the address bar.

Step 4:

The page will load, and yellow arrow icons will appear next to data or tables that Excel can recognize.

Step 5:

Select the table you want to extract.

Step 6:

Click Import to pull the selected data into your worksheet.

Your chosen web data will now be imported into Excel, neatly arranged in rows and columns.

When to use web queries

Excel Web Queries are best suited for:

- Websites that present data in well-structured HTML tables.

- Scenarios where you need a quick way to capture data without extra tools.

- Users who prefer to stay within Excel rather than rely on third-party software.

Method 3: Use Excel VBA to extract data from a website with login to Excel

For users with some programming experience, Excel’s Visual Basic for Applications (VBA) provides the ability to write macros that can directly scrape data from websites.

Most Excel users are comfortable with formulas like =AVERAGE(), =SUM(), or =IF(). Fewer are familiar with VBA, the programming language built into Excel that is used to write macros. Excel files containing macros are saved with the .xlsm extension.

Before you can use VBA, you’ll need to enable the Developer tab in the Excel ribbon:

- Right-click anywhere on the ribbon → Customize the Ribbon → check Developer → OK.

Once enabled, you can design your scraping layout and write VBA code within the developer interface. This code can be linked to specific actions or events to automate the data collection process.

⚠️ Important Note:

Using VBA for scraping is highly technical and generally not recommended for non-programmers. Macros are step-by-step procedures written in VBA, and while they are powerful, they require both coding knowledge and troubleshooting skills.

What VBA scraping can achieve

With Excel VBA, you can automate many aspects of web data extraction, including:

- Sending HTTP requests to download and access webpage content.

- Parsing HTML structures to locate and extract specific pieces of information.

- Automating imports from multiple pages or entire websites without manual repetition.

- Exporting results directly into Excel cells, where the data can be further cleaned, analyzed, or visualized.
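The VBA steps below send a plain GET request and do not log in. For comparison, here is a minimal Python sketch (standard library only) of the part they leave out: submitting a login form and keeping the session cookie for later requests. It assumes a simple username/password form with no CSRF token, JavaScript, CAPTCHA, or MFA; the login URL and the form field names are hypothetical and must be taken from the target site's `<form>` markup.

```python
import urllib.parse
import urllib.request
from http.cookiejar import CookieJar


def login(login_url, form_fields, timeout=30):
    """Submit a login form; return (opener, jar) that keeps the session cookies.

    form_fields maps the form's input names to values, e.g.
    {"username": "me", "password": "secret"}. The real names are
    site-specific and come from the <input name="..."> tags in its form.
    """
    jar = CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    body = urllib.parse.urlencode(form_fields).encode("utf-8")
    # Supplying data turns the request into a POST with
    # Content-Type: application/x-www-form-urlencoded.
    with opener.open(login_url, data=body, timeout=timeout) as resp:
        resp.read()  # any Set-Cookie headers from the response are now in jar
    return opener, jar


def fetch(opener, url, timeout=30):
    """GET a page with the stored cookies attached and return it as text."""
    with opener.open(url, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

A call such as `opener, jar = login("https://website.com/login", {"username": "me", "password": "secret"})` followed by `fetch(opener, "https://website.com/reports")` would return the logged-in page, ready for HTML parsing. Check that HTML for a logout link or similar marker, since many sites answer a failed login with an ordinary 200 page.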

6 steps to extract data from a website with login to Excel using VBA

Step 1: Open the Visual Basic Editor

In Excel, press ALT + F11 to open the Visual Basic for Applications (VBA) editor, then choose Insert → Module to add a new module for your scraping code.

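Step 2: Add References to the Web Libraries

VBA has no Imports statement (that is VB.NET syntax). Instead, in the VBA editor go to Tools → References and check Microsoft XML, v6.0 (the MSXML2 library, for sending HTTP requests) and Microsoft HTML Object Library (MSHTML, for parsing HTML documents). These references let Excel interact with websites and let your code declare their object types.

Step 3: Declare Variables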

Define objects for handling HTTP requests and the HTML document:

```vba
Dim xmlHttp As MSXML2.XMLHTTP60   ' sends the HTTP request
Dim html As MSHTML.HTMLDocument   ' holds the parsed page
```

Step 4: Send a Request and Load HTML

Use the XMLHTTP object to perform a GET request, then pass the response into an HTML document:

```vba
Set xmlHttp = New MSXML2.XMLHTTP60
xmlHttp.Open "GET", "https://website.com", False   ' False = wait for the response
xmlHttp.send

Set html = New MSHTML.HTMLDocument
html.body.innerHTML = xmlHttp.responseText
```

Step 5: Extract Data and Export to Excel

Navigate the DOM to extract the desired element(s), then write the results directly into Excel cells:

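```vba
Dim data As String
' Replace "element" with the id of the element you want to read
data = html.getElementById("element").innerText

ActiveSheet.Cells(1, 1).Value = data   ' write the result to cell A1
```

Step 6: Clean Up and Repeat if Needed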

Release the objects to free memory (for example, Set xmlHttp = Nothing and Set html = Nothing). If you need to scrape multiple pages, loop through the URLs and repeat the request and extraction steps for each one.
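Looping through pages can be sketched in Python as follows. This minimal version assumes the site numbers its pages with a query parameter (the `?page={page}` template in the example is hypothetical) and that a page with no rows marks the end; `fetch` and `parse` are passed in, so any download and parsing functions can be plugged in.

```python
import time
from typing import Callable, Iterable, Iterator


def scrape_pages(url_template: str,
                 fetch: Callable[[str], str],
                 parse: Callable[[str], Iterable],
                 max_pages: int = 50,
                 delay_seconds: float = 1.0) -> Iterator:
    """Fetch numbered pages one by one and yield the rows parsed from each.

    Stops at the first page that yields no rows, or after max_pages.
    """
    for page in range(1, max_pages + 1):
        rows = list(parse(fetch(url_template.format(page=page))))
        if not rows:  # an empty page usually means we ran past the last one
            break
        yield from rows
        time.sleep(delay_seconds)  # pause between requests to limit load on the site


if __name__ == "__main__":
    # Fake two-page site standing in for real fetch/parse functions
    fake_pages = {
        "https://website.com/list?page=1": "a,b",
        "https://website.com/list?page=2": "c",
    }
    rows = scrape_pages("https://website.com/list?page={page}",
                        fetch=lambda url: fake_pages.get(url, ""),
                        parse=lambda html: html.split(",") if html else [],
                        delay_seconds=0)
    print(list(rows))  # ['a', 'b', 'c']
```

The `max_pages` cap is a safety net in case a site never returns an empty page (some repeat the last page instead).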

⚠️ Note: This approach works best for static websites. For pages with dynamic content (e.g., JavaScript-rendered elements), VBA may not capture the data without additional handling.

How to handle complex logins in web scraping

Many websites use stronger login methods like multi-factor authentication (MFA), CAPTCHAs, and dynamic forms. These add security but make automated scraping more difficult.

Multi-factor authentication (MFA):

- Manual entry: Enter MFA codes yourself for occasional scraping.

- API access: Use official APIs if available to avoid MFA prompts.

- Session cookies: Log in once, capture cookies, and reuse them until they expire.

⚠️ Automating MFA bypass is generally discouraged for security, ethical, and legal reasons unless explicitly allowed through official channels.
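The session-cookie option (log in once yourself, completing the MFA prompt, then reuse the cookies) can be sketched with Python's `http.cookiejar`. This assumes the cookies are held in a `MozillaCookieJar` (use one instead of a plain `CookieJar` when logging in from Python), or that you have a cookies.txt file in the Netscape format that some browser export extensions produce; check what your export tool actually writes. The function names are illustrative.

```python
import urllib.request
from http.cookiejar import MozillaCookieJar


def save_session(jar: MozillaCookieJar, path: str) -> None:
    """Write cookies to a Netscape-format cookies.txt file."""
    # Session cookies have no expiry date and are flagged "discard";
    # without ignore_discard=True they would not be written at all.
    jar.save(path, ignore_discard=True)


def load_session(path: str):
    """Reload saved cookies and return (opener, jar) for later requests.

    ignore_expires is left False, so cookies past their expiry date are
    skipped: once the session expires, log in (and pass MFA) again.
    """
    jar = MozillaCookieJar()
    jar.load(path, ignore_discard=True)
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar
```

After a manual login, `save_session(jar, "cookies.txt")` stores the session; in later runs, `opener, jar = load_session("cookies.txt")` and `opener.open(url)` sends those cookies again. The server may still end the session early, so watch for its login page in responses. Treat the cookies file like a password, since anyone holding it can act as your logged-in session.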

How to handle CAPTCHAs and reCAPTCHAs in web scraping

CAPTCHAs are designed to block automated access, ranging from simple text recognition to image puzzles (reCAPTCHA v2) and invisible checks (reCAPTCHA v3).

Ways to handle them:

- Manual Solving: Practical for small-scale scraping by pausing your script and solving CAPTCHAs yourself.

- CAPTCHA Solving Services: Tools like 2Captcha or Anti-Captcha use APIs to return solutions, but add costs and third-party dependencies.

- Headless Browser Automation: Tools such as Selenium drive a real browser, which can sometimes avoid triggering simpler CAPTCHAs that plain HTTP requests would run into.

- Machine Learning (Advanced): Training a custom model is possible for consistent CAPTCHA types, but it’s highly complex and rarely worth the effort.
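The manual-solving option can be sketched as a fetch wrapper that pauses when a page looks like a CAPTCHA challenge. This is a minimal sketch under two assumptions: the marker strings (the `g-recaptcha` and `h-captcha` widget class names) are only examples, so check what your target site's challenge page actually contains; and solving the CAPTCHA in your browser only helps if `fetch` shares that browser's session, for example through a Selenium-driven browser or reloaded cookies.

```python
from typing import Callable

# Example markers only: class names used by reCAPTCHA v2 and hCaptcha widgets.
# Inspect your target site's challenge page for text that reliably identifies it.
CAPTCHA_MARKERS = ("g-recaptcha", "h-captcha")


def looks_like_captcha(html: str, markers=CAPTCHA_MARKERS) -> bool:
    """Very rough check: does the page contain any known CAPTCHA marker?"""
    lowered = html.lower()
    return any(marker in lowered for marker in markers)


def fetch_with_manual_captcha(url: str,
                              fetch: Callable[[str], str],
                              wait: Callable[[str], object] = input,
                              max_attempts: int = 3) -> str:
    """Fetch url; if a CAPTCHA shows up, pause for a person to solve it, then retry."""
    for _ in range(max_attempts):
        html = fetch(url)
        if not looks_like_captcha(html):
            return html
        wait(f"CAPTCHA on {url}: solve it in the browser, then press Enter... ")
    raise RuntimeError(f"Still blocked by a CAPTCHA after {max_attempts} attempts")
```

Passing `wait` as a parameter (defaulting to `input`) keeps the pause easy to replace, for instance with a desktop notification in a longer-running job.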

⚠️ Keep in mind that bypassing CAPTCHAs may violate a site’s terms of service, so always proceed responsibly.

Data cleaning and preparation for Excel

After extracting data from a website, the raw output is often messy and not ready for immediate analysis. Cleaning and preparation ensure accuracy, consistency, and usability in Excel.

Why It Matters

- Inconsistent Formats: Dates, numbers, or text may appear differently (e.g., 1/1/2026 vs. Jan 1, 2026).

- Missing Values: Empty cells, “N/A,” or placeholders can distort results.

- Duplicates: Scraping may capture the same entry multiple times.

- Irrelevant Characters: Extra spaces, HTML tags, or special symbols clutter data.

- Wrong Data Types: Numbers may import as text, preventing calculations.

Key Cleaning Steps

- Remove Duplicates: Use Excel’s built-in tool or Pandas drop_duplicates().

- Handle Missing Values: Delete, impute (average, median, placeholder), or leave them if meaningful.

- Standardize Formats:

  - Dates → YYYY-MM-DD

  - Numbers → remove symbols/commas

  - Text → consistent case, trim spaces

- Remove Noise: Apply Excel’s TRIM() or CLEAN(), or regex in Python to strip HTML/special characters.

- Split/Combine Columns: Break apart full names, or merge related data fields.

- Correct Data Types: Ensure numbers, dates, and text are properly recognized.
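The cleaning steps above map onto a few pandas calls. The sketch below runs them on a small, made-up DataFrame (the column names and values are hypothetical); filling missing prices with the median is just one of the listed options, chosen for illustration.

```python
import pandas as pd

# Hypothetical scraped rows showing the problems listed above
raw = pd.DataFrame({
    "Full Name": ["Alice Smith", "Alice Smith", "Bob  Jones", "Carol White"],
    "Price": ["$1,200", "$1,200", "N/A", "$950"],
    "Notes": ["<b>VIP</b>", "<b>VIP</b>", "", "repeat buyer"],
})

# Remove duplicates: identical rows are kept once
df = raw.drop_duplicates().copy()

# Remove noise: strip HTML tags, collapse repeated spaces
df["Notes"] = df["Notes"].str.replace(r"<[^>]+>", "", regex=True).str.strip()
df["Full Name"] = df["Full Name"].str.split().str.join(" ")

# Correct data types: "$1,200" -> 1200.0, "N/A" -> NaN
df["Price"] = pd.to_numeric(df["Price"].str.replace(r"[$,]", "", regex=True),
                            errors="coerce")

# Handle missing values: here, fill with the median of the known prices
df["Price"] = df["Price"].fillna(df["Price"].median())

# Split columns: first and last name
df[["First", "Last"]] = df["Full Name"].str.split(" ", n=1, expand=True)

print(df)
```

The missing price becomes 1075.0, the median of 1200 and 950; whether imputing like this is appropriate depends on how the data will be analyzed.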

Tools for Cleaning

- Excel: Functions like TRIM, CLEAN, and REPLACE, plus the Remove Duplicates tool and Power Query.

- Python (Pandas): Automates complex tasks with functions like dropna(), fillna(), replace(), and to_datetime(). For example:

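```python
import pandas as pd

# Sample scraped data with typical problems: padded text,
# thousands separators, placeholders, and mixed date formats
df = pd.DataFrame({
    'Name': [' Alice ', 'Bob', 'Charlie'],
    'Value': ['1,000', '2,500', 'N/A'],
    'Date': ['2023-01-01', 'Jan 2, 2023', '2023/01/03']
})

df['Name'] = df['Name'].str.strip()                      # trim extra spaces
df['Value'] = pd.to_numeric(df['Value'].str.replace(',', ''),
                            errors='coerce')             # '1,000' -> 1000, 'N/A' -> NaN
# format='mixed' parses each date on its own (pandas 2.0+; older
# versions handle mixed formats without it)
df['Date'] = pd.to_datetime(df['Date'], format='mixed')

print(df)
df.to_excel('cleaned_data.xlsx', index=False)            # requires the openpyxl package
```

Which method should you choose?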

The best approach for extracting website data into Excel—especially from login-protected sites—depends on your technical skills, the complexity of the site, and how often you need to run the extraction.

| Method | Ease of Use | Best For | Requires Coding? | Handles Complex Sites? |
| --- | --- | --- | --- | --- |
| No-Code Scraping Tools (e.g., ParseHub, WebHarvy, Import.io) | Very Easy | Beginners, bulk scraping, sites with login/authentication | No | Yes |
| Excel Web Queries | Easy | Simple HTML tables, public data | No | No |
| Excel VBA | Moderate | Programmers, custom automation | Yes | Partly (static pages; JavaScript-rendered content needs extra handling) |

Final thoughts

Extracting website data into Excel is a powerful skill for analysis, research, and decision-making. From no-code tools for beginners to VBA and Python for advanced users, each method offers unique strengths.

When choosing your approach, consider:

- Site Complexity: Does the site use logins, CAPTCHAs, or dynamic content?

- Frequency: Is this a one-time task or an ongoing workflow?

- Your Skills: Do you prefer a no-code tool, or are you comfortable programming?

No matter the method, always practice responsible scraping by respecting terms of service, robots.txt rules, and rate limits. And don’t overlook data cleaning and preparation—a crucial step to ensure accuracy and usability in Excel.
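Checking robots.txt and spacing out requests can be partly automated with Python's built-in `urllib.robotparser`. A minimal sketch, assuming the example rules below stand in for the site's real robots.txt and a one-second fallback delay when the file sets none; the user-agent string and the function name are hypothetical.

```python
import time
import urllib.robotparser

# Example rules standing in for https://example.com/robots.txt. For a live
# site, call parser.set_url("https://example.com/robots.txt") and
# parser.read() instead of parse().
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 1
"""


def make_polite_checker(robots_txt, user_agent="my-excel-scraper", default_delay=1.0):
    """Return a function that checks robots.txt and spaces out requests."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent) or default_delay
    last_request = [float("-inf")]  # time of the previous allowed request

    def allowed_and_wait(url):
        if not parser.can_fetch(user_agent, url):
            return False  # disallowed by robots.txt: skip this URL
        wait = last_request[0] + delay - time.monotonic()
        if wait > 0:
            time.sleep(wait)  # honour the crawl delay
        last_request[0] = time.monotonic()
        return True

    return allowed_and_wait


if __name__ == "__main__":
    check = make_polite_checker(ROBOTS_TXT)
    for url in ["https://example.com/products?page=1",
                "https://example.com/account/orders"]:
        print(url, "->", "fetch" if check(url) else "skip")
```

Calling the checker before every request keeps the robots.txt rules and the delay in one place, whichever download method you use.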

With the right method and workflow, you can reliably transform web data into structured, actionable insights.