How to Scrape Craigslist in 2026: Best Tools + 4 Effective Methods

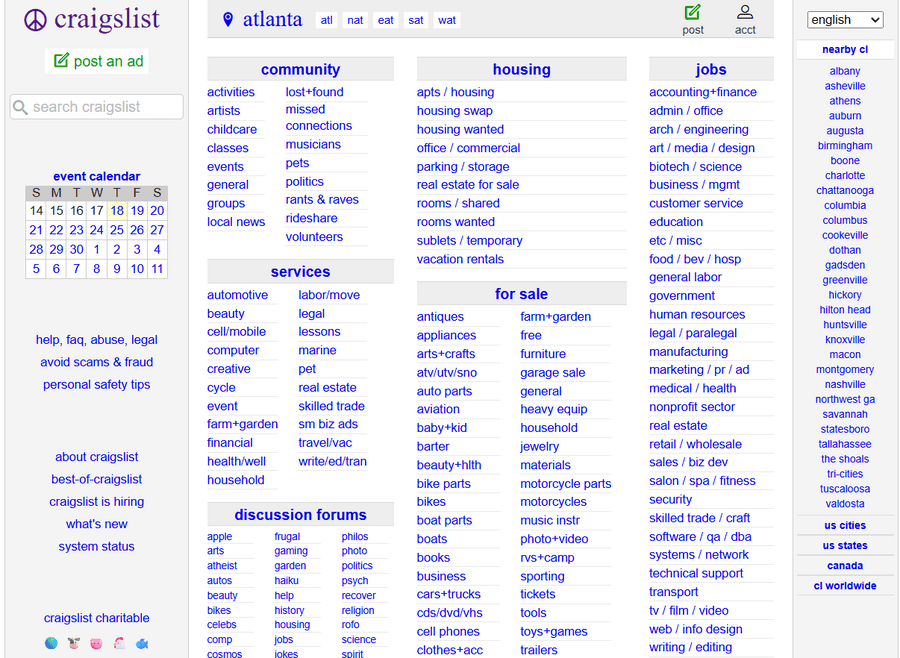

Craigslist remains one of the largest and most popular classified websites in the United States, offering a vast array of listings ranging from housing and jobs to free stuff and local services. For businesses, researchers, and individuals alike, extracting data from Craigslist can unlock valuable insights and opportunities.

This comprehensive guide dives deep into Craigslist scraping, covering everything from definitions and legal considerations to top tools, API alternatives, automation techniques, and how to search all Craigslist USA regions effectively.

If you are new to the topic, our detailed article on web scraping explains what it is and how it works.

Best Tools for Craigslist Scraping in 2026

When it comes to extracting data from Craigslist, choosing the right tool makes all the difference. Here is a breakdown of the top tools you can use to scrape Craigslist:

| Tool/Method | Description |
| --- | --- |
| Python-based Scraping (Scrapy, BeautifulSoup) | Build custom Craigslist crawlers using Python libraries. Extract posts, prices, locations, images, and more. |
| Multilogin | Anti-detect browser that lets you manage multiple scraping sessions with unique browser fingerprints. |
| Browser Extensions/Plugins | Simple add-ons that scrape visible Craigslist data directly in the browser. |
| Unofficial Craigslist APIs & Data Aggregators | Third-party APIs and platforms that expose Craigslist data in structured form. |

What is Craigslist Scraping?

Craigslist scraping is the automated process of collecting information from Craigslist listings using software (often called scrapers or crawlers). Instead of clicking through pages by hand, a scraper visits category and search pages, follows listing links, and extracts structured fields—e.g., post ID, title, price, location/region, date, description, images (or image URLs), seller type, and category.

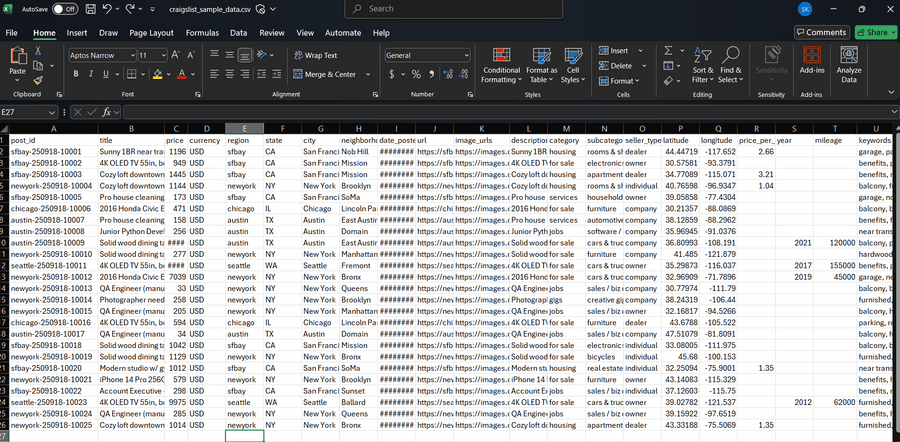

That raw data is then normalized (clean field names and formats), enriched (e.g., geocoding locations to lat/long, inferring categories, deduplicating repeated posts), and saved to spreadsheets, CSV/Excel files, or databases so it can be filtered, charted, and queried at scale.
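
As a rough illustration of the normalize and deduplicate steps, here is a minimal Python sketch. The field names (`post_id`, `title`, `price`, `location`) and the sample rows are assumptions made for this example, not a schema that Craigslist defines.

```python
import re


def normalize_listing(raw: dict) -> dict:
    """Return a cleaned copy of one scraped listing (hypothetical field names)."""
    price_match = re.search(r"\d[\d,]*", raw.get("price") or "")
    return {
        "post_id": str(raw.get("post_id", "")).strip(),
        # Collapse runs of whitespace in the title.
        "title": " ".join((raw.get("title") or "").split()),
        # "$1,250" becomes 1250; a missing price becomes None.
        "price": int(price_match.group().replace(",", "")) if price_match else None,
        "location": (raw.get("location") or "").strip().title(),
    }


def dedupe_by_post_id(listings: list[dict]) -> list[dict]:
    """Keep the first listing seen for each post ID."""
    seen = set()
    unique = []
    for item in listings:
        if item["post_id"] not in seen:
            seen.add(item["post_id"])
            unique.append(item)
    return unique


# Made-up sample rows, including one repeated post.
raw_rows = [
    {"post_id": "101", "title": "  Sunny  1BR apartment ", "price": "$1,250", "location": "mission district"},
    {"post_id": "101", "title": "Sunny 1BR apartment", "price": "$1,250", "location": "Mission District"},
    {"post_id": "102", "title": "Road bike", "price": "", "location": "oakland"},
]
clean_rows = dedupe_by_post_id([normalize_listing(row) for row in raw_rows])
```

Enrichment steps such as geocoding would slot in after `normalize_listing`, since they usually depend on the cleaned location string.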

Put simply: scraping turns messy, page-by-page browsing into a clean dataset you can analyze, automate, and act on.

Why Scrape Craigslist Data?

1. Structure data for analysis

Craigslist pages mix text, images, and metadata. Scraping converts that into rows and columns, making comparisons trivial—e.g., average rent by neighborhood, median price for used laptops by year/spec, or week-over-week supply changes for specific models. Once it’s in CSV/Excel, you can sort, pivot, chart, and join with your own data.
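
To make the "rows and columns" idea concrete, the sketch below computes a median price per neighborhood using only the standard library. The `neighborhood` and `price` keys and the figures are invented for the example.

```python
from collections import defaultdict
from statistics import median


def median_price_by(rows, key):
    """Group rows by `key` and return the median price of each group."""
    groups = defaultdict(list)
    for row in rows:
        if row.get("price") is not None:  # skip listings without a price
            groups[row[key]].append(row["price"])
    return {name: median(prices) for name, prices in groups.items()}


# Invented sample data standing in for scraped rental listings.
rows = [
    {"neighborhood": "Mission", "price": 2400},
    {"neighborhood": "Mission", "price": 2800},
    {"neighborhood": "Mission", "price": 3000},
    {"neighborhood": "Sunset", "price": 2100},
    {"neighborhood": "Sunset", "price": None},
]
summary = median_price_by(rows, "neighborhood")
```

The same function works for any grouping column, for example a model year for used laptops.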

2. Generate qualified leads

Many categories (real estate, automotive, services, gigs, for-sale) are rich lead sources. A scraper can pull new posts that match your criteria (budget, keywords, location radius), extract contact info when available, and route results to your CRM or a simple Google Sheet—helping sales or recruiting teams respond quickly while opportunities are fresh.
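
One possible way to express such criteria in code is a small filter function. This is only a sketch: `matches_criteria`, its parameters, and the sample listings are hypothetical, and a location-radius check is left out for brevity.

```python
def matches_criteria(listing, keywords, max_price):
    """Return True if a listing mentions any keyword and fits the budget."""
    text = f"{listing.get('title', '')} {listing.get('description', '')}".lower()
    has_keyword = any(keyword.lower() in text for keyword in keywords)
    price = listing.get("price")
    within_budget = price is not None and price <= max_price
    return has_keyword and within_budget


# Invented listings used only to exercise the filter.
listings = [
    {"post_id": "1", "title": "Trek road bike", "description": "Lightly used", "price": 450},
    {"post_id": "2", "title": "Mountain bike", "description": "Needs new tires", "price": 900},
    {"post_id": "3", "title": "Couch", "description": "Free to a good home", "price": 0},
    {"post_id": "4", "title": "Vintage bike frame", "description": "", "price": None},
]
leads = [item for item in listings if matches_criteria(item, ["bike"], 500)]
```

Listings without a price are excluded here; whether to keep them for manual review is a judgment call.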

3. Market research & competitor monitoring

With regular crawls, you can track pricing strategies, listing velocity, and availability across cities or regions. For example: monitor how quickly certain rentals get taken down, see how service providers adjust pricing, or measure seasonal demand for specific product types.

4. Identify resale/arbitrage opportunities

Clean, structured data makes it easier to spot underpriced items, negotiate confidently, and set target margins. (Note: reselling can land in a gray area depending on category and local norms—use judgment and comply with applicable rules.)

5. Automate repetitive work

Instead of re-running the same searches every day, schedule crawls and push results to your destination of choice (CSV, database, Slack/Email alerts). You can de-duplicate by post ID, highlight only new or changed listings, and trigger follow-up workflows automatically.
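
The new-or-changed check can be sketched as a comparison between two crawl snapshots keyed by post ID. The snapshot structure (a dict mapping post ID to listing fields) is an assumption of this example.

```python
def diff_listings(previous: dict, current: dict):
    """Compare two snapshots that map post_id -> listing fields.

    Returns three lists of post IDs: new, changed, and removed.
    """
    new = [pid for pid in current if pid not in previous]
    changed = [pid for pid in current if pid in previous and current[pid] != previous[pid]]
    removed = [pid for pid in previous if pid not in current]
    return new, changed, removed


# Invented snapshots from two consecutive crawls.
previous = {"101": {"price": 1200}, "102": {"price": 300}, "103": {"price": 50}}
current = {"101": {"price": 1150}, "102": {"price": 300}, "104": {"price": 75}}

new_ids, changed_ids, removed_ids = diff_listings(previous, current)
```

Only the IDs in `new_ids` and `changed_ids` would need to be pushed to an alert channel, which keeps notifications short.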

6. Compare across regions at scale

Craigslist is segmented by city/area. Scraping lets you unify multiple regional sites into one dataset so you can:

- Run the same query across dozens of markets,

- Normalize locations to a common schema, and

- Aggregate results for a nationwide view.

7. Operational visibility for teams

Teams can tag, annotate, and share scraped datasets to coordinate outreach, track status (contacted / pending / closed), and maintain a history of market changes over time.

Typical Outputs From a Good Scrape

- Listing table with post ID, title, price, region, date, URL, image URLs, and description text.

- Derived fields like category/subcategory, normalized neighborhood, price per sq. ft. (housing), year/mileage (autos), or keyword hits.

- Dashboards/alerts highlighting new matches, price drops, or removed/expired listings.

If you’re interested in scraping other platforms, check out our guide on OnlyFans scraping with FanScraper.

Legal Considerations of Craigslist Scraping

Before diving into web scraping Craigslist, it’s crucial to understand the legal landscape.

- Craigslist’s Terms of Service: Craigslist explicitly prohibits the use of robots, spiders, scrapers, and crawlers to extract data.

- API Limitations: The official Craigslist API is designed for posting data, not for data extraction.

- Legal Precedents: Craigslist has taken legal action against companies that scraped their data for commercial gain, resulting in multi-million dollar judgments.

- Personal Use vs. Commercial Use: Small-scale scraping of public listings for personal, non-commercial use tends to draw less enforcement attention, but it is still covered by the Terms of Service; commercial scraping can lead to legal consequences.

- Respect Rate Limits: To avoid IP bans or legal issues, scrape at a moderate frequency and respect Craigslist’s robots.txt file.
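
A robots.txt check and a polite delay can be sketched with Python's standard `urllib.robotparser`. The rules in `SAMPLE_ROBOTS_TXT` are made up for the example and are not Craigslist's actual file; in a real crawler you would load the live file with `set_url()` and `read()` instead. The user agent name `example-bot` is also a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration only; not the contents of any real site's file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /reply
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="example-bot"):
    """Return True if the parsed rules permit fetching this URL."""
    return parser.can_fetch(user_agent, url)


def polite_delay(user_agent="example-bot", default_seconds=5.0):
    """Use the site's Crawl-delay if present, else a conservative default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default_seconds
```

Calling `time.sleep(polite_delay())` between requests is the simplest way to apply the result.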

Top Craigslist Scraping Tools and Methods

1. Multilogin Antidetect Browser

For scaling Craigslist scraping while minimizing blocks, Multilogin lets you run multiple isolated browser profiles that mimic real users and can be automated.

- Create isolated antidetect profiles with unique fingerprints (Canvas/WebGL/WebRTC, UA, timezone/locale).

- Built-in proxy management: assign HTTP(S)/SOCKS5 proxies per profile; rotate or reuse as needed.

- Automation-ready: control via REST API (e.g., with Postman) and drive profiles using Selenium or Puppeteer.

- Persistent sessions: cookies/cache saved per profile for long-lived logins.

- Optional team sharing and access controls for collaboration.

Pros: Scales reliably, reduces blocks, per-profile proxies, works with Selenium/Puppeteer automation.

Cons: Paid tool; setup/learning curve.

2. Python-Based Scraping (Craigslist Crawler)

For developers, Python libraries like Scrapy and BeautifulSoup offer powerful ways to build custom Craigslist crawlers. These tools allow you to:

- Target specific Craigslist categories and regions.

- Extract detailed data fields such as post ID, title, price, and images.

- Export data into CSV, Excel, or databases.

Pros: Highly customizable, free, and open-source.

Cons: Requires coding skills and maintenance.

3. Preset Templates and No-Code Tools

For non-coders, tools like ParseHub, WebHarvy, Data Miner, and Simplescraper provide preset Craigslist scraper templates that simplify the process:

- Automatically detect and extract data fields.

- Support exporting to Excel, CSV, or databases.

- Allow customization of scraping workflows without coding.

Pros: User-friendly, fast setup.

Cons: May have limitations on complex scraping tasks.

4. Browser Extensions and Plugins

Some browser extensions offer basic Craigslist scraping capabilities but are generally limited in scope and data volume.

To explore more options beyond Craigslist, see our complete breakdown of the best scraping tools for different use cases.

Craigslist API alternatives

While Craigslist does not provide an official API for data extraction, some third-party services and tools attempt to fill this gap:

- Unofficial Craigslist APIs: Some developers have created unofficial APIs that scrape Craigslist data and expose it via REST endpoints.

- Data Aggregators: Platforms that collect Craigslist data and offer it via their own APIs.

- Limitations: These alternatives may violate Craigslist’s terms and can be unreliable or shut down.

Step-by-step: How to scrape Craigslist

1. Set Up Your Environment

Install Python and the Scrapy framework. These will form the foundation of your Craigslist scraper.

2. Integrate Multilogin

Use Multilogin to create an antidetect browser profile with built-in proxy support. This helps avoid IP bans and keeps scraping sessions isolated. Multilogin can be controlled through its API, allowing you to launch profiles programmatically before running your spider.

3. Create a Scrapy Project

Initialize a new Scrapy project to organize your spiders, settings, and pipelines.
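
Once the project exists, a conservative `settings.py` is a reasonable starting point. The fragment below uses standard Scrapy setting names; the specific values and the project name `listings_demo` are assumptions chosen for illustration, not recommendations from Craigslist or Scrapy.

```python
# settings.py (fragment) for a Scrapy project
BOT_NAME = "listings_demo"  # hypothetical project name

# Honor robots.txt and keep the request rate low.
ROBOTSTXT_OBEY = True
DOWNLOAD_DELAY = 5                  # seconds between requests to the same site
CONCURRENT_REQUESTS_PER_DOMAIN = 1

# Let Scrapy back off automatically when responses slow down.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5
AUTOTHROTTLE_MAX_DELAY = 60

# Write scraped items to a CSV feed.
FEEDS = {
    "listings.csv": {"format": "csv"},
}
```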

4. Define a Spider

Write a spider targeting Craigslist URLs (e.g., housing, jobs, or services). Configure it to crawl across multiple regions if needed.

5. Parse the Data

Extract important fields such as:

- Post ID

- Title

- Price

- Location

- Description

- Images
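
The parsing step might look like the sketch below. To stay dependency-free it uses the standard library's `html.parser` as a stand-in for Scrapy selectors or BeautifulSoup. The HTML in `SAMPLE_HTML`, including the `listing`, `title`, `price`, and `location` class names and the `data-pid` attribute, is hypothetical markup invented for this example; real pages will differ and change over time.

```python
from html.parser import HTMLParser

# Hypothetical markup; not copied from any real page.
SAMPLE_HTML = """
<ul>
  <li class="listing" data-pid="7700000001">
    <a class="title" href="/apa/d/sunny-studio/7700000001.html">Sunny studio</a>
    <span class="price">$1,800</span>
    <span class="location">Downtown</span>
  </li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collect post ID, URL, title, price, and location from the sample markup."""

    FIELD_CLASSES = {"title", "price", "location"}

    def __init__(self):
        super().__init__()
        self.listings = []
        self._current = None  # listing being built, if inside an <li>
        self._field = None    # field whose text we are currently reading

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        css_class = attrs.get("class", "")
        if tag == "li" and css_class == "listing":
            self._current = {"post_id": attrs.get("data-pid")}
        elif self._current is not None and css_class in self.FIELD_CLASSES:
            self._field = css_class
            if css_class == "title":
                self._current["url"] = attrs.get("href")

    def handle_data(self, data):
        if self._current is not None and self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "li" and self._current is not None:
            self.listings.append(self._current)
            self._current = None
        self._field = None  # stop capturing text once any tag closes


listing_parser = ListingParser()
listing_parser.feed(SAMPLE_HTML)
parsed = listing_parser.listings
```

Descriptions and images typically live on the individual listing page, so they would need a second request per post and a similar extraction pass.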

6. Automate with Selenium or Puppeteer (Optional)

If you need to handle dynamic content or complex interactions, run your scraper through Multilogin profiles using Selenium or Puppeteer. Driving a real browser profile can make the crawl more reliable and less likely to be blocked.

7. Run the Spider

Execute your crawler and store the scraped data in a structured format like CSV, Excel, or a database for further analysis.
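
If you are not relying on Scrapy's built-in feed exports, the storage step can be sketched with the standard `csv` module. The column list in `FIELDNAMES` and the sample rows are assumptions for this example.

```python
import csv
import io

# Assumed column order for the export.
FIELDNAMES = ["post_id", "title", "price", "location", "url"]


def write_listings_csv(listings, file_obj):
    """Write listing dicts as CSV rows; unknown keys are ignored, missing ones left blank."""
    writer = csv.DictWriter(file_obj, fieldnames=FIELDNAMES, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(listings)


# Invented rows; the second title contains a comma to show quoting.
sample_listings = [
    {"post_id": "101", "title": "Sunny studio", "price": 1800, "location": "Downtown"},
    {"post_id": "102", "title": "Desk, solid oak", "price": 120, "location": "Oakland", "seller": "owner"},
]

buffer = io.StringIO()
write_listings_csv(sample_listings, buffer)
csv_text = buffer.getvalue()
```

To write to disk instead of memory, pass a file opened with `open("listings.csv", "w", newline="", encoding="utf-8")`.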

Techniques for Searching All Craigslist USA Regions

Craigslist is divided into numerous regional sites, each serving a specific city or area. To search every US region at once, consider these strategies:

- Use a Craigslist Area Map: Visualize regions with a Craigslist region or area map to identify all city sites.

- Automate Multi-Region Scraping: Configure your scraper or crawler to iterate through all regional URLs.

- Aggregate Results: Combine data from all regions into a single database for comprehensive analysis.

- Search by Post ID: Use tools to look up a Craigslist post ID across regions if you have specific listings to track.
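
Iterating over regional URLs can be sketched as below. The URL pattern (`https://<region>.craigslist.org/search/<category>?query=...`), the region subdomains, and the category code `bia` are assumptions based on how search links commonly appear; verify them against the live site before relying on them.

```python
from urllib.parse import urlencode


def build_search_urls(regions, category, query):
    """Build one search URL per region using the assumed URL pattern."""
    params = urlencode({"query": query})
    return [
        f"https://{region}.craigslist.org/search/{category}?{params}"
        for region in regions
    ]


# A short, illustrative region list; a full crawl would load all region names.
regions = ["sfbay", "newyork", "chicago"]
urls = build_search_urls(regions, category="bia", query="road bike")
```

Tagging each scraped row with the region it came from makes the later aggregation step a simple merge.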

Regional Mapping Strategies for Craigslist

Understanding Craigslist’s geographic segmentation is key to effective scraping and searching.

- Map of Craigslist Cities: Craigslist organizes listings by city and metro area.

- Craigslist Region Map: Use official or third-party maps to identify all active Craigslist sites.

- Craigslist Searches Across Regions: Some tools let you run automated Craigslist searches across multiple regions simultaneously.

- How to Search Entire US on Craigslist: By combining regional URLs or using specialized scrapers, you can cover the entire US Craigslist network.

Automation Methods for Craigslist Scraping

Automation is essential for efficient Craigslist data extraction.

- Scheduled Crawlers: Set up your Craigslist crawler to run at regular intervals.

- Automatic Craigslist Search: Use tools that support keyword alerts and auto-updates.

- Data Export Automation: Integrate scraping tools with databases or cloud storage for seamless data flow.

- Best Craigslist Search Tool: Choose tools that balance ease of use, speed, and compliance with Craigslist’s policies.
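
A scheduled crawler can be as simple as a loop that runs a job at a fixed interval. The sketch below is one minimal way to do it; `run_on_schedule` and `fake_crawl` are hypothetical names, and the `sleep` parameter is injectable so the example runs instantly. In production, cron or a task scheduler is usually a sturdier choice.

```python
import time


def run_on_schedule(job, interval_seconds, max_runs, sleep=time.sleep):
    """Run `job` up to `max_runs` times, waiting `interval_seconds` between runs."""
    results = []
    for run_number in range(max_runs):
        results.append(job())
        if run_number < max_runs - 1:  # no need to wait after the final run
            sleep(interval_seconds)
    return results


# Demo with a stand-in job and a fake sleep that only records the wait times.
crawl_log = []
waits = []


def fake_crawl():
    crawl_log.append("crawl")
    return len(crawl_log)


results = run_on_schedule(fake_crawl, interval_seconds=3600, max_runs=3, sleep=waits.append)
```

Replacing `fake_crawl` with a function that launches your spider and omitting the `sleep` argument gives an hourly crawl.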

Conclusion

Craigslist scraping, when used responsibly, transforms scattered listings into structured, actionable insights. Whether for lead generation, market research, or automation, the right tools—ranging from Python libraries to no-code solutions—make the process accessible to both developers and non-coders. By respecting legal boundaries and leveraging smart automation, you can turn Craigslist’s vast marketplace into a powerful source of data-driven opportunities.